Docker DNS & Service Discovery with Consul and Registrator

Consul is awesome, and super powerful, but takes a bit of understanding and setting up. We are looking at it now, because it could let us keep the same mechanism in place in development as we might use in production.

What is Service Discovery?

Service Discovery sounds pretty scary, and likely recalls the nightmares of old (like UDDI and WS-Discovery). However, it can also refer to much nicer things like DNS and DHCP. So why do we need it? It never seemed all that useful in the old J2EE days…

Well, now that we’re packaging all our apps (and small services) in containers, these apps all need to know where to find each other! This is even clearer inside the Docker environment, as the daemon dynamically allocates internal IP addresses and host port numbers (if you don’t specify them explicitly).

How can we do it?

So, what can we do about it? A simple solution would be to use DNS. This could be in the form of explicitly writing out entries to /etc/hosts everywhere, or running a simple DNS server. The issue is then – how do we keep these things up to date, as containers (apps) are created and destroyed?

There are some DNS-based solutions (e.g. dnsmasq, docker-dns, skydns), and then there is a whole separate class of solutions, which are backed by distributed key/value stores, such as ZooKeeper, etcd and Consul.

Consul

Consul is one such system that provides a distributed K/V store. It uses the Raft consensus algorithm. This ensures that all the nodes agree on what value a key has, across the cluster, in a fault-tolerant way.

So what does this have to do with DNS?

The idea is that services can register themselves, and this information is stored in the Consul cluster. Any system can then look up this information, and get the right answer back, even in the face of Consul nodes becoming unavailable.

The neat thing about Consul (as compared to ZooKeeper or etcd) is that it can be queried like a DNS server (as well as via its REST interface).
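To make the lookup side a bit more concrete, here is a rough sketch of how a client might turn Consul's REST catalog response into host/port pairs. The endpoint and the response shape are the ones shown later in this post; the function names, the `CONSUL_URL` constant and the choice of Python are assumptions made for illustration only.

```python
import json
from urllib.request import urlopen

# Assumption: Consul's HTTP API listens on the Docker bridge address
# that is used later in this post.
CONSUL_URL = "http://172.17.42.1:8500"


def parse_catalog(entries):
    """Turn a /v1/catalog/service/<name> response into (host, port) pairs."""
    endpoints = []
    for entry in entries:
        # ServiceAddress is empty when the service registered no address of
        # its own; fall back to the address of the node it runs on.
        host = entry.get("ServiceAddress") or entry["Address"]
        endpoints.append((host, entry["ServicePort"]))
    return endpoints


def lookup(service, consul_url=CONSUL_URL):
    """Ask Consul's catalog where instances of `service` are running."""
    url = f"{consul_url}/v1/catalog/service/{service}"
    with urlopen(url, timeout=5) as resp:
        return parse_catalog(json.load(resp))


# Offline example, using the response shape shown later in this post.
sample = json.loads("""
[{"Node": "marv", "Address": "10.0.0.2",
  "ServiceID": "d7492c56c92a:sad_leakey:8080",
  "ServiceName": "hello-world", "ServiceTags": null,
  "ServiceAddress": "", "ServicePort": 8080}]
""")
print(parse_catalog(sample))  # [('10.0.0.2', 8080)]
```

Once Consul is actually running, `lookup("hello-world")` would do the same thing against the live catalog.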

Okay, let’s get started!

There are a few things we’ll need to get going:

- Docker

- https://github.com/gliderlabs/docker-consul – Consul packaged as a Docker image

- https://github.com/gliderlabs/registrator – Automatically monitor container starts/stops

- A test dockerized application

First, we need to get boot2docker behaving in a more predictable way. By running boot2docker config we can see that the configuration file is at /opt/boxen/config/docker/profile.

We want this file to look like:

VM = "boxen-boot2docker-vm"

SSHKey = "/opt/boxen/data/docker/id_boot2docker"

HostIP = "10.0.0.1"

DHCPIP = "10.1.1.1"

NetMask = [255, 0, 0, 0]

LowerIP = "10.0.0.2"

UpperIP = "10.0.0.2"

This means that when we do a boot2docker init && boot2docker up we will always get 10.0.0.2 as the ‘public’ IP address of the docker daemon.

Building and Running Consul

$ git clone https://github.com/gliderlabs/docker-consul.git

$ cd docker-consul

$ vim 0.5/consul-server/config/server.json

# Add "data_dir": "/var/consul", to that file

$ mv Makefile 0.5/

$ cd 0.5/

$ make

You should now have gliderlabs/consul-server visible when you run docker images.

Now, run:

$ docker run -d -p 172.17.42.1:8500:8500 -p 172.17.42.1:53:8600/udp -p 10.0.0.2:8500:8500 -p 8400:8400 gliderlabs/consul-server -node myconsul -bootstrap -advertise 10.0.0.2 -client 0.0.0.0

Let’s break this down a little:

- -d – Run in detached mode

- -p 172.17.42.1:8500:8500 – This binds Consul’s HTTP port to the Docker daemon bridge (docker0) address

- -p 10.0.0.2:8500:8500 – Make this available to the public side too (so we can use the Web UI in dev)

- -p 172.17.42.1:53:8600/udp – Binds port 53 (the default DNS port) for UDP onto the bridge address. Consul serves DNS on port 8600 in the container. This means that it is available as DNS to all the other containers on the expected port

- -p 8400:8400 – This is Consul’s RPC port

- gliderlabs/consul-server – The name of the docker image to run

- -node myconsul – Set the name of the node

- -bootstrap – We are starting a Consul cluster (of one node) from scratch, rather than joining an existing cluster. We must bootstrap it

- -advertise 10.0.0.2 – From the docs: “The advertise address is used to change the address that we advertise to other nodes in the cluster”. This also changes the address that services on that node advertise as; otherwise they expose their internal Docker IP address, which is usually not reachable by other apps/systems

- -client 0.0.0.0 – Consul should listen on all interfaces. By default it listens on localhost only

Phew!
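If the different -p forms above blur together, this little sketch might help. It pulls a publish spec apart into its pieces. It only handles the two shapes used in this post (ip:hostPort:containerPort and hostPort:containerPort, with an optional /proto suffix); the `Publish` type and `parse_publish` function are made-up names, and Docker itself accepts more forms than this.

```python
from typing import NamedTuple, Optional


class Publish(NamedTuple):
    host_ip: Optional[str]  # None means "all host interfaces"
    host_port: int
    container_port: int
    protocol: str           # "tcp" unless the spec says otherwise


def parse_publish(spec: str) -> Publish:
    """Parse the [ip:]hostPort:containerPort[/proto] forms used above."""
    ports, _, proto = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    else:
        # Other forms exist in Docker, but this sketch does not cover them.
        raise ValueError(f"unsupported publish spec: {spec!r}")
    return Publish(host_ip, int(host_port), int(container_port), proto or "tcp")


for spec in ("172.17.42.1:8500:8500", "172.17.42.1:53:8600/udp", "8400:8400"):
    print(spec, "->", parse_publish(spec))
```

The interesting one is 172.17.42.1:53:8600/udp: host port 53 on the bridge address maps to port 8600 in the container, over UDP.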

Registrator

Okay, let’s run registrator! This is a little simpler:

$ docker run -d -v /var/run/docker.sock:/tmp/docker.sock gliderlabs/registrator consul://172.17.42.1:8500

- -d – Run in detached mode

- -v /var/run/docker.sock:/tmp/docker.sock – This mounts the docker daemon socket into the container, so it can monitor the API for start/stop events from other containers

- gliderlabs/registrator – The name of the docker image to run

- consul://172.17.42.1:8500 – Tell registrator that we’re using Consul (and not etcd!), and to look for it on the bridge address
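Conceptually, what registrator does on our behalf is call Consul's agent API whenever a container starts or stops. The sketch below is not registrator's actual code, just an illustration of the kind of request involved, using Consul's /v1/agent/service/register and /v1/agent/service/deregister endpoints. The helper names are hypothetical, and the ID format simply mirrors the shape that shows up in the catalog output later in this post.

```python
import json
from urllib.request import Request, urlopen

# Assumption: Consul's HTTP API is bound to the bridge address, as above.
CONSUL_URL = "http://172.17.42.1:8500"


def service_definition(name, port, hostname, container_name, address=""):
    """Build the JSON body for Consul's agent service-registration endpoint."""
    return {
        # Illustrative ID scheme; it only needs to be unique per agent.
        "ID": f"{hostname}:{container_name}:{port}",
        "Name": name,
        "Port": port,
        # An empty address means "use the agent's own address".
        "Address": address,
    }


def register(definition, consul_url=CONSUL_URL):
    """Register a service with the Consul agent (container started)."""
    req = Request(
        f"{consul_url}/v1/agent/service/register",
        data=json.dumps(definition).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urlopen(req, timeout=5) as resp:
        return resp.status == 200


def deregister(service_id, consul_url=CONSUL_URL):
    """Remove a service from the Consul agent (container stopped)."""
    req = Request(
        f"{consul_url}/v1/agent/service/deregister/{service_id}",
        method="PUT",
    )
    with urlopen(req, timeout=5) as resp:
        return resp.status == 200


definition = service_definition("hello-world", 8080, "d7492c56c92a", "sad_leakey")
print(json.dumps(definition, indent=2))
```

The nice part of running registrator is that none of our apps need to contain code like this; they just start, and they show up in Consul.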

Let’s take a look at what we have

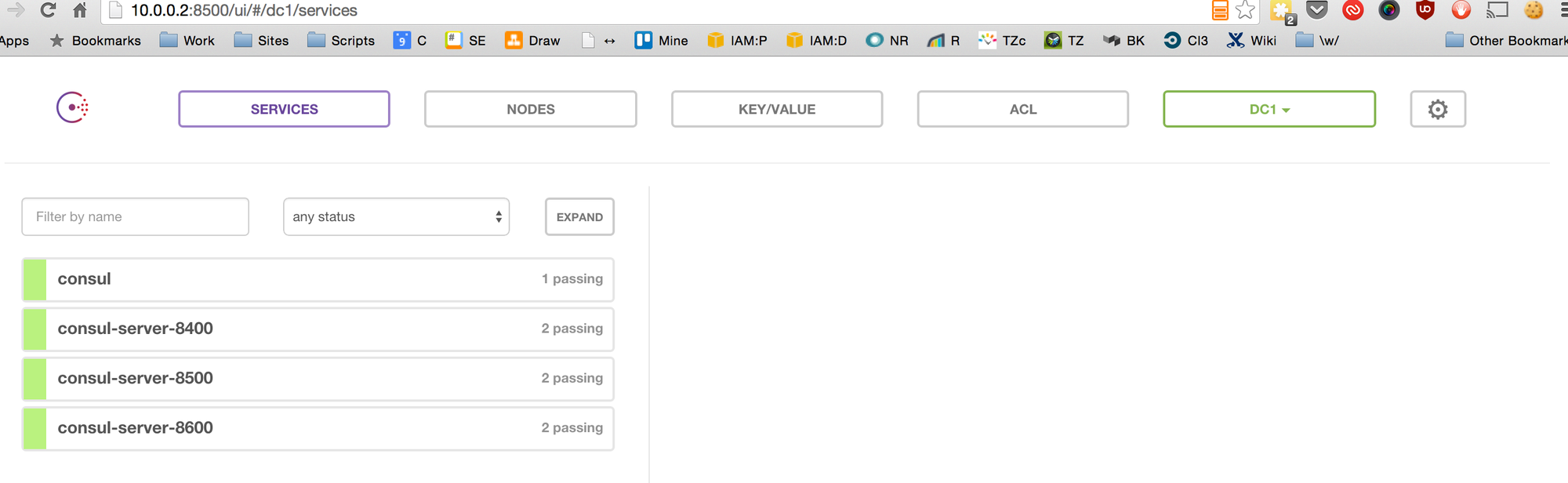

Go to http://10.0.0.2:8500/ in your browser

Okay, we can see that Consul is running, and that registrator has picked up its exposed services.

Running your own application

$ docker run -d -p 8080:8080 hello-world

- -d – Run in detached mode

- -p 8080:8080 – We want to expose our app on port 8080

- hello-world – Our application’s image name

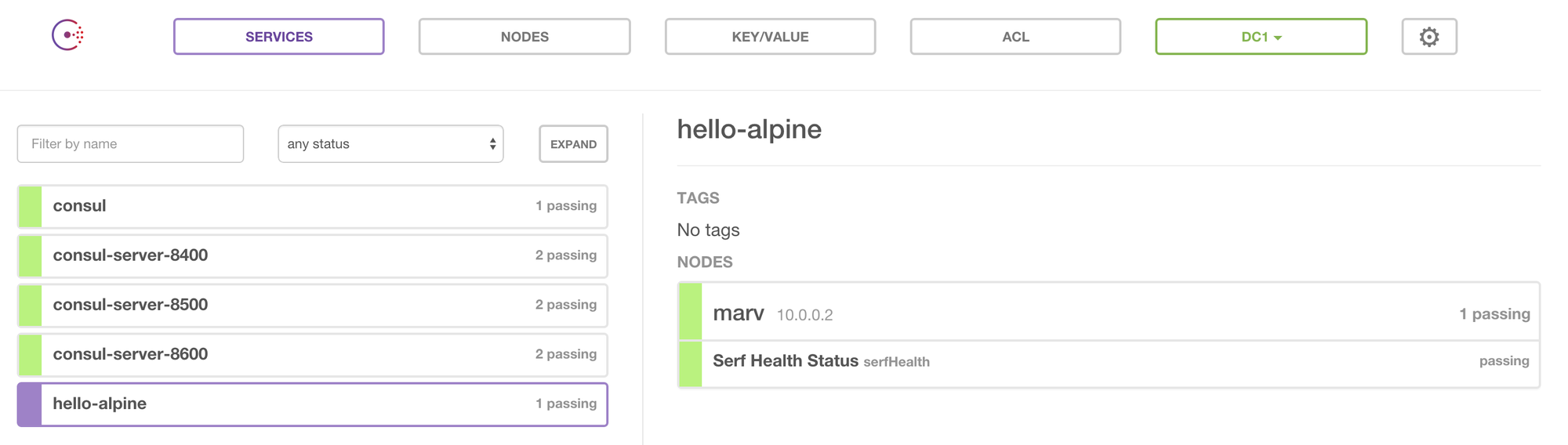

Refresh your browser!

We can see our app has been registered!

Using the Discovery mechanisms

Okay, so with a docker image that has some networking tools available, let’s see what we can see!

$ docker pull joffotron/docker-net-tools:latest

$ docker run -it joffotron/docker-net-tools

/ #

This will dump you into a sh shell.

Let’s try some things out:

$ dig @172.17.42.1 hello-world.service.consul

; <<>> DiG 9.10.2 <<>> @172.17.42.1 hello-world.service.consul

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 15649

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; WARNING: recursion requested but not available

;; QUESTION SECTION:

;hello-world.service.consul. IN A

;; ANSWER SECTION:

hello-world.service.consul. 0 IN A 10.0.0.2

;; Query time: 6 msec

;; SERVER: 172.17.42.1#53(172.17.42.1)

;; WHEN: Fri Jul 17 05:16:14 UTC 2015

;; MSG SIZE rcvd: 88

So we can see we got 10.0.0.2 as the IP address for the hello-world app. But what about the port? We can look up the SRV (service) record:

$ dig @172.17.42.1 hello-world.service.consul SRV

; <<>> DiG 9.10.2 <<>> @172.17.42.1 hello-world.service.consul SRV

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 61022

;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; WARNING: recursion requested but not available

;; QUESTION SECTION:

;hello-world.service.consul. IN SRV

;; ANSWER SECTION:

hello-world.service.consul. 0 IN SRV 1 1 8080 marv.node.dc1.consul.

;; ADDITIONAL SECTION:

marv.node.dc1.consul. 0 IN A 10.0.0.2

;; Query time: 1 msec

;; SERVER: 172.17.42.1#53(172.17.42.1)

;; WHEN: Fri Jul 17 05:22:23 UTC 2015

;; MSG SIZE rcvd: 148

We see we get port 8080. Cool! What about over REST?

$ curl http://172.17.42.1:8500/v1/catalog/service/hello-world

[

{

"Node":"marv",

"Address":"10.0.0.2",

"ServiceID":"d7492c56c92a:sad_leakey:8080",

"ServiceName":"hello-world",

"ServiceTags":null,

"ServiceAddress":"",

"ServicePort":8080

}

]

And the app is available there:

$ curl http://10.0.0.2:8080/

<!DOCTYPE html>

HelloWorld

Hello World

Key/Value Store

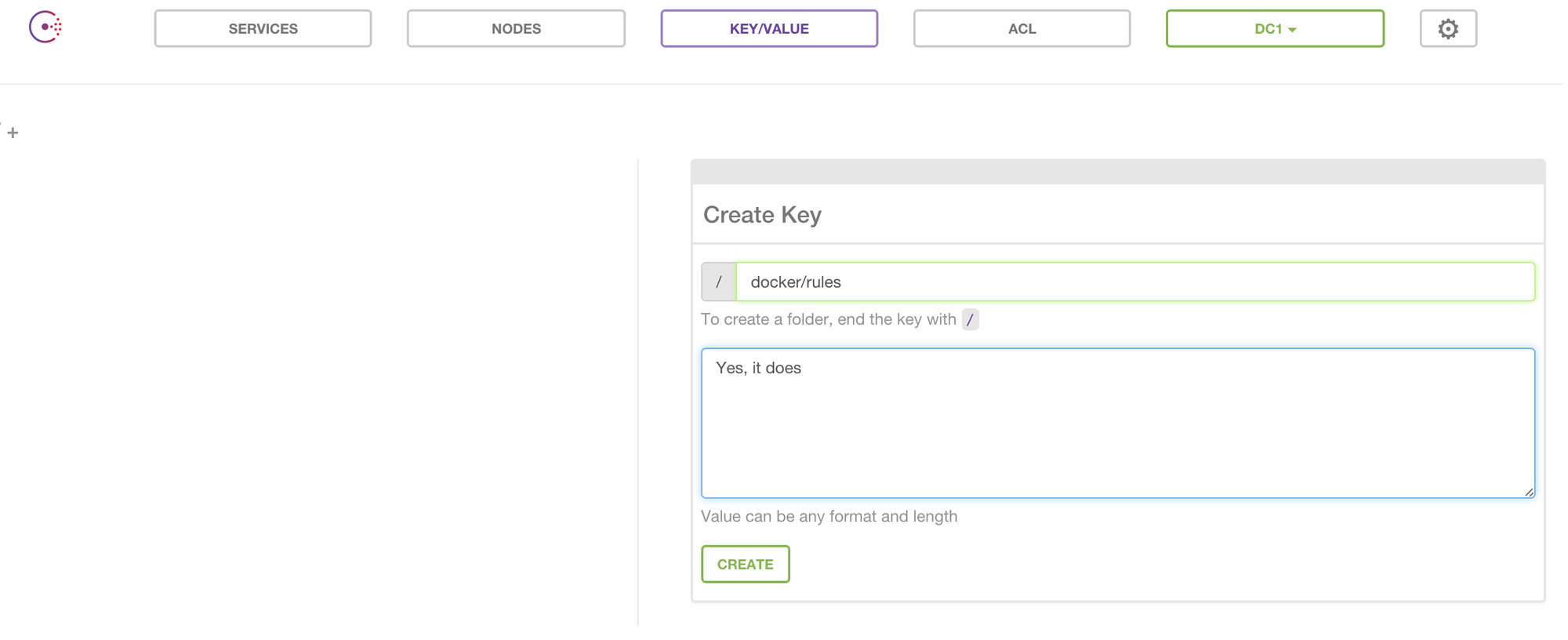

We can also use Consul to store key/value pairs, such as dynamic configuration data:

$ curl http://172.17.42.1:8500/v1/kv/docker/rules

[

{

"CreateIndex":72,

"ModifyIndex":72,

"LockIndex":0,

"Key":"docker/rules",

"Flags":0,

"Value":"WWVzLCBpdCBkb2Vz"

}

]
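Note that the Value field comes back base64-encoded, so a client has to decode it before use. A minimal sketch of that step, assuming the response shape shown above (the `decode_kv` helper is a made-up name, not part of any Consul client library):

```python
import base64
import json


def decode_kv(entries):
    """Map each key in a /v1/kv response to its decoded string value."""
    decoded = {}
    for entry in entries:
        raw = entry["Value"]
        # Guard against entries that carry no value at all.
        if raw is None:
            decoded[entry["Key"]] = None
        else:
            decoded[entry["Key"]] = base64.b64decode(raw).decode("utf-8")
    return decoded


# The response from the curl call above, pasted in as a string.
sample = json.loads("""
[{"CreateIndex": 72, "ModifyIndex": 72, "LockIndex": 0,
  "Key": "docker/rules", "Flags": 0, "Value": "WWVzLCBpdCBkb2Vz"}]
""")
print(decode_kv(sample))  # {'docker/rules': 'Yes, it does'}
```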

# WWVzLCBpdCBkb2Vz is "Yes, it does", base64 encoded ;)

Next steps!

The next things we would want to do are:

- Get Consul working in a resilient cluster, running on AWS. See https://aws.amazon.com/blogs/compute/service-discovery-via-consul-with-amazon-ecs/

- Set up Consul Health checks. This allows for Consul to monitor the health of your services, and can act as an easily configurable Circuit Breaker

- Use something like docker-compose to simplify and automate the setup of Consul for local development, and make sure it’s running