Automated infrastructure testing with Serverspec

By running automated rspec tests against our real infrastructure, we reduced the uncertainty around our operating environments and decreased the amount of manual correctness checking that engineers have to do when provisioning new systems.

So, what’s the problem?

We have some “legacy managed” infrastructure, built by hand over several years. As some of our critical software runs on this platform, we recognised the need for a Disaster Recovery environment that mirrors it, in case of a large failure in that environment.

This presented several issues:

- That environment was built in several stages and was inconsistent across many machines, with differences in installed packages and configuration.

- New capacity in that environment was sometimes added by hand, sometimes by cloning existing machines.

- We needed to verify that the new disaster recovery environment was configured the same way as the system it mirrors.

- In the past, this kind of verification had been done manually by an engineer: a costly, time-consuming, and error-prone job.

Introducing Serverspec!

When you are dealing purely with software, the solution to these problems is obvious: automated testing! What if we could regularly run a set of tests, and have them tell us if an environment is “correct” or not? We can! Serverspec builds on top of rspec and lets you test the state of running machines by connecting to them and asserting the state of files, running processes, and so on. In addition, we used nodespec (written by one of our own engineers), which adds some nice features around configuring connections to our servers.

Serverspec provides a nice DSL for testing common aspects of running systems. For example, this is a test we use to check that statsd is set up correctly:

    shared_examples_for 'it is the statsd endpoint' do
      describe file('/shared/redbubble/statsdConfig.js') do
        it { should be_file }
        its(:content) { should match %r|port: 8126| }
        its(:content) { should match %r|flushInterval: 60000| }
      end

      describe port(8126) do
        it { should be_listening.with('udp') }
      end
    end

How we implemented Serverspec:

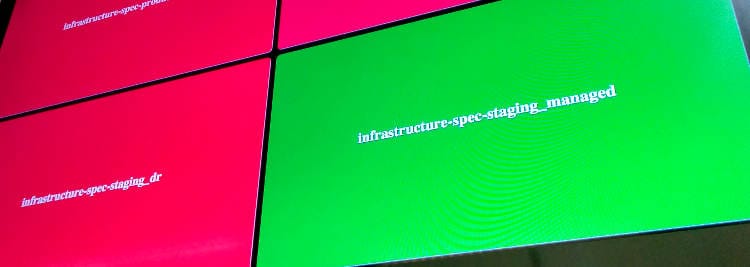

Our environments

For this piece of work, we had roughly four different environments to deal with. There was staging and production, and for each of those, there was the managed environment and its counterpart DR version in the cloud. Each of these differed from the others in the number of servers, in the layout of the services installed on them, in configuration values, and, in a few places, in software versions.

How did we organise our specs to cope with that?

It sounds like a lot of complexity is involved in dealing with all the environments described above, and it is! To deal with it, we structured our test code into several “layers”:

- Spec-file per deployment environment (e.g. production-managed), composed of:

  - Spec per-server, composed of:

    - Behavioural examples, composed of:

      - Individual rspec tests

      - (and more composed behaviours)

Here is part of one of the top-level environment specs:

    require 'spec_helper'

    environment = EnvironmentFactory.ey_managed_production

    environment.nodespecs_for_all_hosts.each do |nodespec_config|
      describe "Node #{nodespec_config['host']}", nodespec: nodespec_config do
        it_behaves_like 'a base server', environment
        it_behaves_like 'a server with the firewall', environment
      end
    end

    environment.app_servers.each do |index|
      describe "App Server #{index}", nodespec: environment.nodespec_for("prod-app#{index}") do
        it_behaves_like 'an app server', environment.merge(unicorn_worker_count: index < 15 ? 10 : 5)
        it_behaves_like 'a syslog-ng publisher', environment
      end
    end

    describe 'prod-rabbitmq', nodespec: environment.nodespec_for('prod-rabbitmq') do
      it_behaves_like 'rabbitmq', environment
      it_behaves_like 'it has the shared NFS mount', environment
      it_behaves_like 'a syslog-ng publisher', environment
    end

Here, on lines 5, 12 and 19, we have blocks that describe individual servers in the environment. The first two describe groups of servers (i.e. all hosts, or application servers), without having to individually list each one. The last block describes our RabbitMQ server, referring to it by its hostname.

Each of these blocks refers to an rspec shared example, which describes a behaviour we want a server to have. These can be very high-level (e.g. behave like “an app server”), or be more specific (“has the NFS shared mount”).
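To make the group-based blocks more concrete, here is a minimal plain-Ruby sketch of how the app server loop expands into one set of expectations per machine. The server count of 20 is an assumption for illustration only; the host name pattern and the worker-count expression are copied from the spec above.

```ruby
# Hypothetical stand-in for environment.app_servers: a range of server indices.
# The real count comes from the environment's configuration; 20 is assumed here.
app_servers = (1..20)

# Mirrors the expressions used in the spec: the host name is derived from the
# index, and servers 1-14 expect 10 unicorn workers while 15 and above expect 5.
expectations = app_servers.map do |index|
  { host: "prod-app#{index}", unicorn_worker_count: index < 15 ? 10 : 5 }
end

expectations.each do |e|
  puts "#{e[:host]} expects #{e[:unicorn_worker_count]} unicorn workers"
end
```

Because the list of servers is generated rather than written out, adding capacity only means changing the count in one place.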

Let’s have a look at an example:

    shared_examples 'an app server' do |environment|
      it_behaves_like 'a unicorn server', environment
      it_behaves_like 'it has the shared NFS mount', environment
      it_behaves_like 'it has ruby installed', '1.9.3p484'
      it_behaves_like 'it has the application deployed', environment
      it_behaves_like 'an nginx server', environment
      it_behaves_like 'uploading logs to S3', environment.merge(server_role: 'appserver')
      it_behaves_like 'has geoip capabilities'
    end

Here, we can see that these behaviours are further composed of other behaviours, which themselves look like:

    shared_examples_for 'it has the shared NFS mount' do |environment|
      describe file('/shared') do
        it { should be_mounted.with(rw: true, type: 'nfs') }
      end

      describe file('/shared/nfs') do
        it { should be_readable.by_user(environment[:user]) }
        it { should be_writable.by_user(environment[:user]) }
      end

      describe file('/etc/fstab') do
        its(:content) { should match /#{environment.nfs_hostname}:\/data\s*\/shared\s*nfs/ }
      end
    end

Managing configuration differences

Okay, so that’s cool. But you still haven’t told me how that helps with all the different environments!?

You may have noticed the `environment = EnvironmentFactory.ey_managed_production` line at the top of the first example. This is a convenience method we created to provide an object that holds all the specifics of the environment under test. That object is essentially a hash of values plus some convenience methods, both of which are used in the specs.

Let’s break it down a little:

    class EnvironmentFactory
      # ...

      COMMON = {
        memcached_memusage: 1024,
        nfs_exported_dir: '/data'
      }

      STAGING = {
        environment: 'staging',
        rails_env: 'staging',
        database: 'staging',
      }

      STAGING_MANAGED = {
        unicorn_worker_count: 2,
        memcached_memusage: 64,
        app_server_external_ip: '99.99.99.99',
        nfs_exported_dir: '/shared',
        syslog_ng_endpoint: 'tm99-s00999',
        memcached_hostname: 'tm99-s00998',
        max_connections: 300
      }

      # ...

      def self.ey_managed_staging
        EyManagedEnvironment.new(COMMON.merge(EY_MANAGED).merge(STAGING).merge(STAGING_MANAGED), :staging)
      end

      # ...
    end

The EnvironmentFactory.ey_managed_staging method pulls in the series of configuration hashes (which lets us set common parameters, and then override them for specific environments), and returns an Environment object:

    class Environment
      def initialize(params)
        @params = params
      end

      # ... hash access ...

      def [](key)
        @params[key]
      end

      def []=(key, value)
        @params[key] = value
      end

      # ... convenience methods ...

      def app_servers
        (1..@params[:app_servers])
      end

      # ... and the nodespec configuration for connecting to machines ...

      def nodespecs_for_all_app_servers
        as_nodespec(hosts_matching('app'))
      end

      def as_nodespec(hosts)
        hosts.map do |hostname|
          {
            'adapter' => 'ssh',
            'os' => 'Gentoo',
            'user' => @params[:user],
            'rails_env' => @params[:rails_env],
            'host' => hostname,
          }
        end
      end
    end

    class EyManagedEnvironment < Environment
      # ... more env-specific convenience methods ...

      def app_master_host
        staging? ? 'staging-app1' : 'prod-app1'
      end
    end

This way, by passing the environment around to the specs, we can refer to things like the amount of memory memcached is expected to use in a common way, but with all the environment-specific information kept in one easy-to-update place!
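The layering can be sketched in a few lines of plain Ruby. This is a simplified illustration, not our actual code: the hashes are cut down to a few keys, `SketchEnvironment` is a hypothetical stand-in for the Environment class, and its `merge` method is an assumption about how a call like `environment.merge(unicorn_worker_count: 10)` in the specs could be supported.

```ruby
# Cut-down configuration layers; key names follow the EnvironmentFactory example.
COMMON          = { memcached_memusage: 1024, nfs_exported_dir: '/data' }.freeze
STAGING         = { environment: 'staging', rails_env: 'staging' }.freeze
STAGING_MANAGED = { memcached_memusage: 64, nfs_exported_dir: '/shared' }.freeze

# Hash#merge returns a new hash. When a key appears on both sides, the value
# from the argument wins, so the right-most layer takes precedence.
params = COMMON.merge(STAGING).merge(STAGING_MANAGED)

# Hypothetical minimal environment wrapper (assumption, for illustration only).
class SketchEnvironment
  def initialize(params)
    @params = params
  end

  # Hash-style read access, as in the Environment class above.
  def [](key)
    @params[key]
  end

  # Assumed helper: returns a new environment with per-spec overrides applied,
  # leaving the original environment untouched.
  def merge(overrides)
    self.class.new(@params.merge(overrides))
  end
end

environment = SketchEnvironment.new(params)
app_env = environment.merge(unicorn_worker_count: 10)

puts environment[:memcached_memusage] # 64, overridden by STAGING_MANAGED
puts environment[:rails_env]          # staging, from the STAGING layer
puts app_env[:unicorn_worker_count]   # 10, a per-spec override
```

Returning a new object from `merge` means one server's override cannot leak into the expectations for the next server in the loop.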

Running specs & the board

The specs for an environment are simply run by issuing:

    $ rspec spec/staging_spec.rb -f d

    Node staging-app1
      behaves like a base server
        behaves like a network service
          Package "ntp"
            should be installed
          Service "ntpd"
            should be running
        behaves like a server monitored by NewRelic
          Package "newrelic-sysmond"
            should be installed
          Service "newrelic-sysmond"
            should be enabled
            should be running

    ... etc ...

We then set this up on a Jenkins server, with a visible dashboard, so we can easily see when something has gone wrong!

The many benefits of infrastructure testing

These suites of tests took a lot of time and effort for our team to retroactively produce for all our systems – so was it worth it? The answer is absolutely, yes. We saw a number of immediate wins:

- When adding server capacity in our manual, managed environment, we can rapidly verify that the servers are provisioned correctly, cutting many dozens of engineer-hours from the time taken.

- We now have a good, version-controlled place to record our infrastructure configuration, including the layout of servers, which services are running where, and where things differ.

- It gave us a way of verifying that our `chef` recipes were doing the right thing in our cloud-based environments.

- It gave us a high degree of confidence in our Disaster Recovery environment, and let us switch live traffic over to it with minimal issues.